In this section, we will cover the simple installation procedures of Spark and Jupyter.

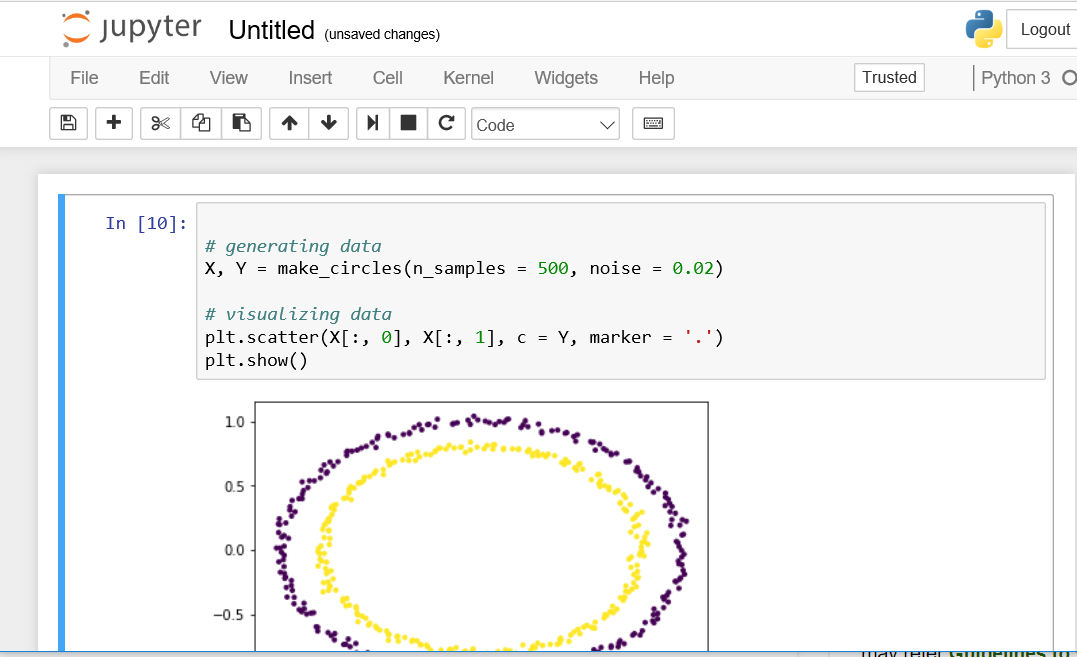

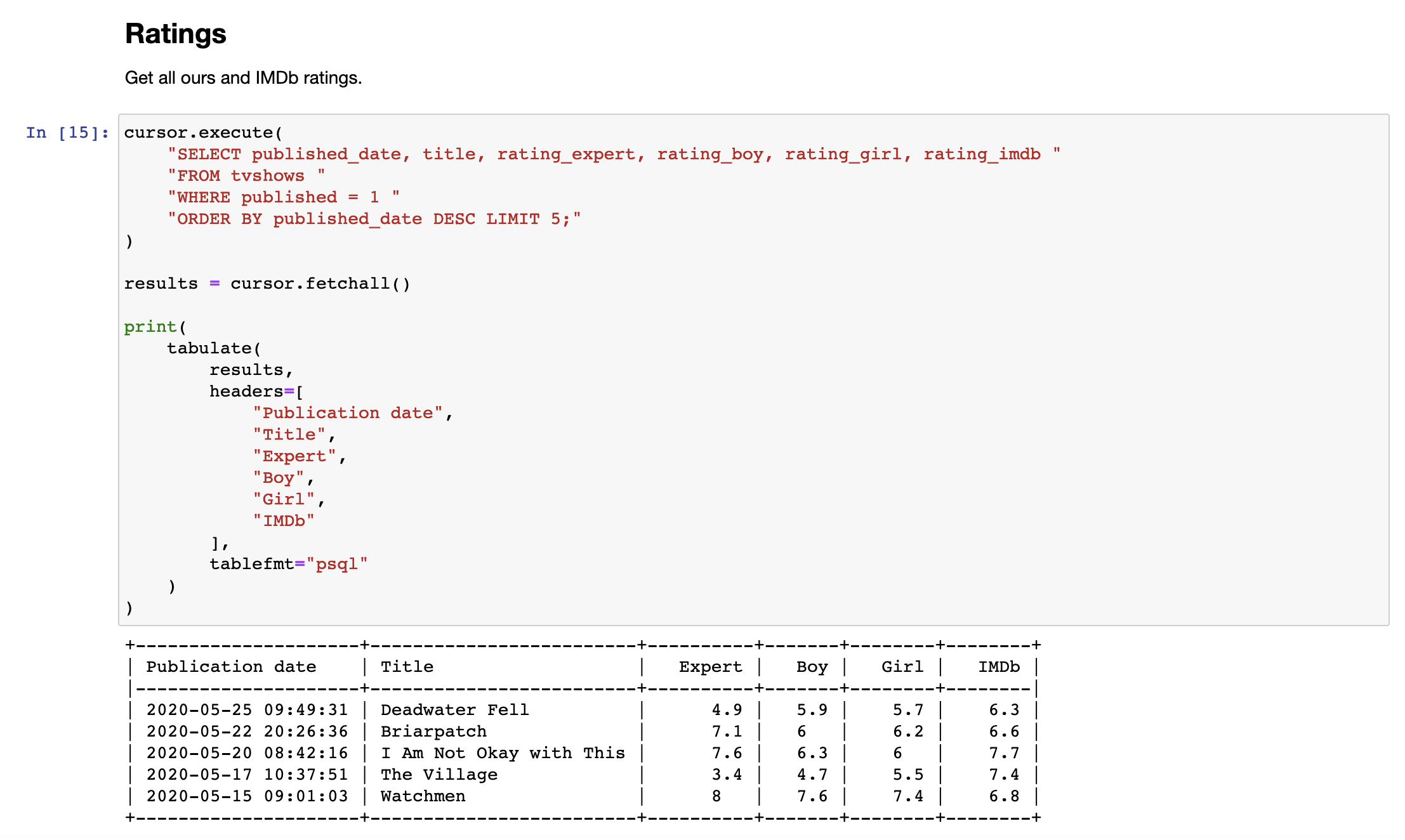

Configuring PySpark with Jupyter and Apache Sparkīefore configuring PySpark, we need to have Jupyter and Apache Spark installed. Furthermore, PySpark supports most Apache Spark features such as Spark SQL, DataFrame, MLib, Spark Core, and Streaming. PySpark allows Python to interface with JVM objects using the Py4J library. It allows users to write Spark applications using the Python API and provides the ability to interface with the Resilient Distributed Datasets (RDDs) in Apache Spark. Python connects with Apache Spark through PySpark. (See why Python is the language of choice for machine learning.) PySpark for Apache Spark & Python While projects like almond allow users to add Scala to Jupyter, we will focus on Python in this post. However, Python is the more flexible choice in most cases due to its robustness, ease of use, and the availability of libraries like pandas, scikit-learn, and TensorFlow. However, most developers prefer to use a language they are familiar with, such as Python. Scala is the ideal language to interact with Apache Spark as it is written in Scala. ) How to connect Jupyter with Apache Spark (Read our comprehensive intro to Jupyter Notebooks. Jupyter also supports Big data tools such as Apache Spark for data analytics needs. JupyterLab is the next-gen notebook interface that further enhances the functionality of Jupyter to create a more flexible tool that can be used to support any workflow from data science to machine learning. Jupyter supports over 40 programming languages and comes in two formats: This approach is highly useful in data analytics as it allows users to include all the information related to the data within a specific notebook. The beauty of a notebook is that it allows developers to develop, visualize, analyze, and add any kind of information to create an easily understandable and shareable single file. A Notebook is a shareable document that combines both inputs and outputs to a single file. 
Jupyter is an interactive computational environment managed by Jupyter Project and distributed under the modified BSB license. Use the right-hand menu to navigate.) How Jupyter Notebooks work (This tutorial is part of our Apache Spark Guide. Yet, how can we make a Jupyter Notebook work with Apache Spark? In this post, we will see how to incorporate Jupyter Notebooks with an Apache Spark installation to carry out data analytics through your familiar notebook interface. When considering Python, Jupyter Notebooks is one of the most popular tools available for a developer.

Spark offers developers the freedom to select a language they are familiar with and easily utilize any tools and services supported for that language when developing. Unlike many other platforms with limited options or requiring users to learn a platform-specific language, Spark supports all leading data analytics languages such as R, SQL, Python, Scala, and Java. All these capabilities have led to Spark becoming a leading data analytics tool.įrom a developer perspective, one of the best attributes of Spark is its support for multiple languages. Moreover, Spark can easily support multiple workloads ranging from batch processing, interactive querying, real-time analytics to machine learning and graph processing. Spark utilizes in-memory caching and optimized query execution to provide a fast and efficient big data processing solution. Apache Spark is an open-source, fast unified analytics engine developed at UC Berkeley for big data and machine learning.
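Before installing anything, it can help to check what is already present on your machine. The sketch below is a hypothetical helper (`check_prerequisites` is our own name, not part of Spark or Jupyter). It uses only the Python standard library to look for a Java runtime (Spark runs on the JVM), a `jupyter` executable, an importable `pyspark` package, and a `SPARK_HOME` environment variable. It assumes these four items are what your setup relies on. For example, a pip-installed PySpark does not need `SPARK_HOME`, so treat that entry as informational.

```python
import importlib.util
import os
import shutil


def check_prerequisites(env=None):
    """Report which PySpark/Jupyter prerequisites are visible from this Python.

    Hypothetical helper for illustration; adjust the checks to your own setup.
    `env` defaults to os.environ and is only used for the variable lookups.
    """
    env = os.environ if env is None else env
    return {
        # Spark runs on the JVM: accept a `java` binary on PATH or a JAVA_HOME variable.
        "java": shutil.which("java") is not None or "JAVA_HOME" in env,
        # The `jupyter` command is installed by the Jupyter Notebook/JupyterLab packages.
        "jupyter": shutil.which("jupyter") is not None,
        # True if `import pyspark` would succeed in this interpreter.
        "pyspark": importlib.util.find_spec("pyspark") is not None,
        # Points at a standalone Spark download; optional for a pip-installed PySpark.
        "spark_home": bool(env.get("SPARK_HOME")),
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name:<10} {'found' if ok else 'missing'}")
```

Anything reported as missing is what the installation steps in this section need to provide.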

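Once both are installed, one common way to connect them is to tell PySpark to use Jupyter as its driver front end. You do this through the `PYSPARK_DRIVER_PYTHON` and `PYSPARK_DRIVER_PYTHON_OPTS` environment variables, so that running `pyspark` opens a notebook server instead of the plain Python shell. The sketch below assumes the `pyspark` launcher (from `$SPARK_HOME/bin` or a pip install) is on your `PATH`. The helper names `pyspark_jupyter_env` and `launch` are hypothetical and not part of Spark.

```python
import os
import subprocess


def pyspark_jupyter_env(interface="notebook", base_env=None):
    """Return a copy of the environment that makes `pyspark` start Jupyter.

    `interface` is the Jupyter subcommand to run: "notebook" or "lab".
    The base environment is copied, never modified in place.
    """
    if interface not in ("notebook", "lab"):
        raise ValueError(f"unsupported Jupyter interface: {interface!r}")
    env = dict(os.environ if base_env is None else base_env)
    # Run the PySpark driver through the `jupyter` executable instead of `python`.
    env["PYSPARK_DRIVER_PYTHON"] = "jupyter"
    # Arguments passed to that driver, giving `jupyter notebook` or `jupyter lab`.
    env["PYSPARK_DRIVER_PYTHON_OPTS"] = interface
    return env


def launch(interface="notebook"):
    """Start `pyspark` with Jupyter as the driver (assumes `pyspark` is on PATH)."""
    subprocess.run(["pyspark"], env=pyspark_jupyter_env(interface), check=True)
```

Calling `launch("lab")` is roughly equivalent to running `PYSPARK_DRIVER_PYTHON=jupyter PYSPARK_DRIVER_PYTHON_OPTS=lab pyspark` in a shell. Another option is to start Jupyter as usual and locate Spark from inside the notebook, for example with the third-party `findspark` package and its `findspark.init()` call. The environment-variable approach needs no extra package, but it ties the notebook server to a single Spark installation.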